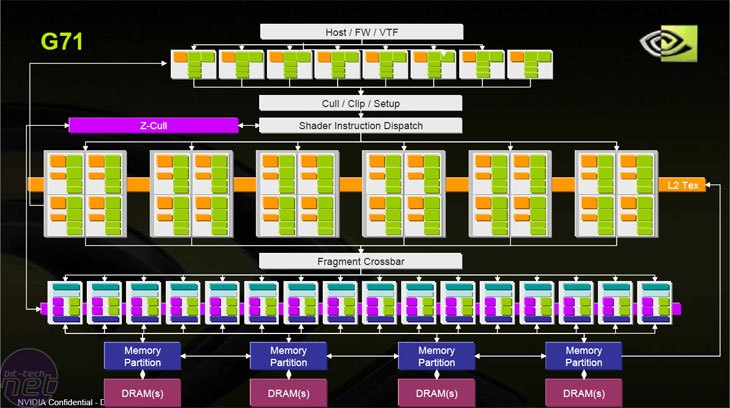

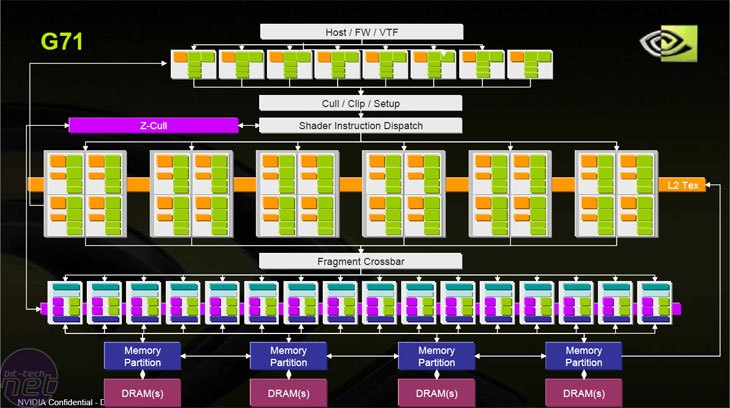

Not surprisingly, both GeForce 7900 GTX and GeForce 7900 GT use the same GPU, known internally as G71. Unlike the GeForce 7800-series, where the 7800 GT was a cut-down part, both have the full 24 pixel pipelines, 8 vertex shaders and 16 pixel output engines. The differences between the two lie in clock speeds, cooling solutions and frame buffer size.

The GeForce 7900 GTX's standard clock speed is 650MHz core, and it has 512MB of GDDR3 running at 1600MHz on a 256-bit memory interface (four 64-bit channels). GeForce 7900 GT, on the other hand, comes with a 450MHz core clock and 256MB of GDDR3 running at 1320MHz. NVIDIA's G7x architecture is widely known to have more than one internal clock speed; NVIDIA tells us that the vertex shaders on GeForce 7900 GTX and 7900 GT operate at 700MHz and 470MHz respectively. Both cards also have dual dual-link DVI TMDS transmitters, meaning that they can power two Dell 3007WFP monitors at their native resolution of 2560x1600.
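The figures above translate into peak memory bandwidth and pixel fill rate with simple arithmetic. The sketch below assumes bandwidth = effective memory clock × bus width in bytes, and peak pixel fill rate = pixel output engines × core clock. It also assumes the 7900 GT uses the same 256-bit bus as the GTX, which the paragraph above doesn't state. The 165MHz single-link limit is the standard DVI figure, and the display check counts active pixels only, ignoring blanking, so real timings need a somewhat higher pixel clock.

```python
from dataclasses import dataclass

SINGLE_LINK_DVI_MHZ = 165  # maximum pixel clock of a single DVI link

@dataclass
class Card:
    name: str
    core_mhz: int           # core clock
    mem_effective_mhz: int  # effective GDDR3 data rate
    bus_bits: int           # memory interface width
    rops: int               # pixel output engines

    def bandwidth_gb_s(self) -> float:
        # transfers per second * bytes per transfer, in GB/s (10^9 bytes)
        return self.mem_effective_mhz * 1e6 * (self.bus_bits // 8) / 1e9

    def pixel_fill_gpix_s(self) -> float:
        # one pixel written per output engine per core clock
        return self.rops * self.core_mhz / 1000

def min_pixel_clock_mhz(width: int, height: int, refresh_hz: int) -> float:
    # active pixels only; real display timings add blanking on top of this
    return width * height * refresh_hz / 1e6

gtx = Card("GeForce 7900 GTX", 650, 1600, 256, 16)
gt = Card("GeForce 7900 GT", 450, 1320, 256, 16)  # 256-bit bus is an assumption

for card in (gtx, gt):
    print(f"{card.name}: {card.bandwidth_gb_s():.2f} GB/s, "
          f"{card.pixel_fill_gpix_s():.1f} Gpixel/s")

clk = min_pixel_clock_mhz(2560, 1600, 60)
print(f"2560x1600@60Hz needs more than {clk:.2f} MHz; a single link tops out "
      f"at {SINGLE_LINK_DVI_MHZ} MHz, so dual-link is required")
```

This works out to 51.2GB/s and 10.4 Gpixel/s for the GTX and 42.24GB/s and 7.2 Gpixel/s for the GT. A 2560x1600 panel at 60Hz needs over 245MHz of pixel clock before blanking is even counted, which is why each output needs a dual-link transmitter.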

What has changed:

Despite the rumours that G71 is simply a die shrink of G70, it is more than that, because NVIDIA has made some improvements to the pixel output engines. In fact, G71 has fewer transistors than G70: G70 had around 302 million, while G71 has only 278 million, meaning that NVIDIA has been able to architect the GPU in a more efficient manner. Efficiency appears to be the whole focus of this architecture.

The internal layout of the GPU is the same, but NVIDIA has improved the blending performance in the pixel output engines. With the GeForce 7800-series, the pixel output engines were capable of either an FP16 blend or a colour write each cycle, meaning that HDR techniques using the FP16 blending method had to take a second pass back through the pixel shader in order to write the pixel's colour value to memory. This has changed in the GeForce 7900-series, and in GeForce 7600 GT too.

Rather than doing an FP16 blend and then another pass to write the colour value to memory, G71 can complete an FP16 blend and write colour values to memory without an additional pass through the pixel shader. We ran some preliminary tests with GeForce 7900 GT and GeForce 7800 GTX running at the same clock speeds (430/1300MHz), and found that the 7900 GT was consistently a few frames per second faster than the 7800 GTX when HDR was being used.
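An FP16 blend is a read-modify-write: the pixel output engine reads the 16-bit floating-point value already in the render target, combines it with the incoming shader colour, and stores the result back at half precision, which is what lets HDR values above 1.0 survive. The sketch below models one additive blend on a single colour channel using Python's `struct` half-float format (`'e'`) and contrasts it with an 8-bit integer target. It illustrates the arithmetic only, not how G71's pixel output engines are built; the additive blend mode and the helper names are assumptions chosen for simplicity.

```python
import struct

def to_fp16(x: float) -> float:
    # round-trip through IEEE 754 half precision, as an FP16 render target stores it
    return struct.unpack('<e', struct.pack('<e', x))[0]

def to_unorm8(x: float) -> float:
    # an 8-bit integer target clamps to [0, 1] and quantises to steps of 1/255
    return round(min(max(x, 0.0), 1.0) * 255) / 255

def additive_blend(dst: float, src: float, store) -> float:
    # blend equation: result = src * ONE + dst * ONE, written back via `store`
    return store(src + dst)

fp16_target = 0.0
int8_target = 0.0
for light in (0.75, 0.75, 1.5):  # three bright lights hitting the same pixel
    fp16_target = additive_blend(fp16_target, light, to_fp16)
    int8_target = additive_blend(int8_target, light, to_unorm8)

print(fp16_target)  # 3.0: the HDR value is preserved
print(int8_target)  # 1.0: everything brighter than white is clipped
```

The change described above is not in this arithmetic but in where it fits: G71 can perform the blend and the colour write in the same trip through the pixel output engines, where the 7800-series needed a second pass.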

What hasn't changed:

At the moment, the talk of the town is HDR lighting. When ATI announced the Radeon X1000-series, it introduced an architecture capable of using FP16 blending for HDR and multisampled antialiasing at the same time, something NVIDIA's GeForce 7800-series cards were unable to achieve.

Unfortunately, NVIDIA hasn't changed the current state of play: it's still impossible to execute an FP16 blend while using multisampled antialiasing. However, there are alternative ways to make HDR and antialiasing work together; Half-Life 2: Lost Coast and Age of Empires III are both examples.

It's possible to do things a little differently, creating the high dynamic range pass without using an FP16 blend. Developers who are on the ball will code two paths: one that gives full FP16 HDR with MSAA on ATI cards, and an alternative method that allows HDR and MSAA to work together on NVIDIA's current hardware.
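The two-path approach can be sketched as a start-up decision based on what the hardware reports. Everything here is hypothetical: the `GpuCaps` fields and the path names are illustrative, not any real graphics API. The Reinhard operator (`c / (1 + c)`) is one common way of tone mapping inside the pixel shader so the result fits an ordinary 8-bit render target that MSAA can resolve without any FP16 blending; we are not claiming this is exactly what Lost Coast or Age of Empires III do.

```python
from dataclasses import dataclass

@dataclass
class GpuCaps:
    # hypothetical capability flags a renderer might query at start-up
    fp16_blend: bool
    fp16_blend_with_msaa: bool

def choose_hdr_path(caps: GpuCaps, want_msaa: bool) -> str:
    if caps.fp16_blend and (caps.fp16_blend_with_msaa or not want_msaa):
        return "fp16_blend"         # accumulate HDR in an FP16 render target
    return "shader_tonemap_int8"    # tone map in the shader, write an 8-bit target

def reinhard(c: float) -> float:
    # maps [0, inf) into [0, 1) so an HDR colour fits an 8-bit target
    return c / (1.0 + c)

radeon_x1000 = GpuCaps(fp16_blend=True, fp16_blend_with_msaa=True)
geforce_7900 = GpuCaps(fp16_blend=True, fp16_blend_with_msaa=False)

print(choose_hdr_path(radeon_x1000, want_msaa=True))   # fp16_blend
print(choose_hdr_path(geforce_7900, want_msaa=True))   # shader_tonemap_int8
print(choose_hdr_path(geforce_7900, want_msaa=False))  # fp16_blend
print(reinhard(3.0))                                   # 0.75
```

Choosing once at start-up keeps the per-frame rendering code free of capability checks; the cost is maintaining and testing two HDR paths.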

Secondly, and probably our biggest disappointment with the new GPUs, NVIDIA has made no improvements to its texture filtering hardware. Anisotropic filtering still uses an angle-dependent algorithm that only fully filters at 45-degree and 90-degree angles. The limitation is in hardware, so the only way to improve filtering quality is to disable the filtering optimisations in NVIDIA's driver control panel.
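For reference, the degree of anisotropy a filter ideally needs depends only on how stretched a pixel's footprint is in texture space, not on which way it points. The sketch below is an illustration, not NVIDIA's unpublished hardware logic. It estimates that degree as the ratio of the largest to the smallest singular value of the 2×2 screen-to-texture Jacobian, clamped to 16x. The helper names and the 8:1 test footprint are hypothetical.

```python
import math

def footprint_jacobian(stretch: float, angle_deg: float):
    # hypothetical footprint: `stretch` texels by 1 texel, rotated on screen.
    # rows are (du/dx, du/dy) and (dv/dx, dv/dy): J = diag(stretch, 1) @ R(angle)
    t = math.radians(angle_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    return ((stretch * cos_t, -stretch * sin_t),
            (sin_t, cos_t))

def ideal_aniso_degree(J, max_aniso: int = 16) -> int:
    (a, b), (c, d) = J
    frob2 = a * a + b * b + c * c + d * d  # sigma_max^2 + sigma_min^2
    det = abs(a * d - b * c)               # sigma_max * sigma_min
    disc = math.sqrt(max(frob2 * frob2 - 4 * det * det, 0.0))
    s_max = math.sqrt((frob2 + disc) / 2)
    s_min = math.sqrt(max((frob2 - disc) / 2, 0.0))
    if s_min == 0.0:
        return max_aniso
    ratio = s_max / s_min
    # small tolerance so floating-point noise (e.g. 8.0000000001) doesn't round up
    return min(math.ceil(ratio - 1e-6), max_aniso)

for angle in (0, 22.5, 45, 67.5, 90):
    print(angle, ideal_aniso_degree(footprint_jacobian(8, angle)))
```

An angle-independent filter would take the same number of samples (8 here) at every orientation; an angle-dependent one, as described above, takes fewer at some of them, which is where the visible blurring comes from.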

G73:

GeForce 7600 GT, known internally as G73, has 5 vertex shaders, 12 pixel shaders and 8 pixel output engines. Aside from the vertex shaders, you could say that the GPU is half of G71. The pixel output engines have the same improvements, so it's possible to complete an FP16 blend and write a colour value to memory in the same pass.

NVIDIA has clocked it at 560MHz core, with the vertex shaders running at the same speed, while the 256MB of GDDR3 memory runs at an impressive 1400MHz effective. Unfortunately, the memory interface is limited to 128 bits (two 64-bit channels), meaning that memory bandwidth is limited to 22.4GB/sec. However, that's more bandwidth than was available to the Radeon 9700 when it was the flagship part in the second half of 2002.
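The 22.4GB/sec figure follows directly from the memory clock and bus width. The sketch below repeats that calculation and compares it with a Radeon 9700 Pro. The Radeon's 620MHz effective memory clock on a 256-bit bus is our assumed figure for the Pro version, not one given in this article, and the function and variable names are illustrative only.

```python
def bandwidth_gb_s(effective_mhz: float, bus_bits: int) -> float:
    # effective transfers per second * bytes per transfer, in GB/s (10^9 bytes)
    return effective_mhz * 1e6 * bus_bits / 8 / 1e9

g73_bw = bandwidth_gb_s(1400, 128)             # GeForce 7600 GT: two 64-bit channels
radeon_9700_pro_bw = bandwidth_gb_s(620, 256)  # assumed: 310MHz DDR on 256 bits

print(f"7600 GT: {g73_bw:.1f} GB/s, Radeon 9700 Pro: {radeon_9700_pro_bw:.2f} GB/s")

# unit counts from the text: G73 is half of G71 apart from the vertex shaders
g71_units = {"vertex": 8, "pixel": 24, "rop": 16}
g73_units = {"vertex": 5, "pixel": 12, "rop": 8}
for unit, count in g73_units.items():
    print(f"{unit}: {count}/{g71_units[unit]} = {count / g71_units[unit]:.3f}")
```

The 7600 GT comes out at 22.4GB/s against roughly 19.8GB/s for the 9700 Pro. The unit ratios show 0.5 for pixel shaders and output engines but 0.625 for vertex shaders.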
